Your scientists were so preoccupied with whether or not they could, they didn’t stop to think if they should.

2024 Update

This post was originally published in 2020. Today, in 2024, the FORTRAN compiler landscape has changed quite a bit – while FORTRAN still does not enjoy first-class WebAssembly support in the LLVM toolchain, it is now possible to set up a much more sane toolchain by applying a few patches to LLVM.

Dr George W Stagg has published an excellent writeup of his LLVM modifications and of the application of the resulting toolchain to several real-world projects, specifically the WebR project and a cool BLAS-based in-browser digit classifier.

Now, back to 2020 …

I wanted to learn more about WebAssembly (Wasm), the new and cool kid in town. When testing out a new technology, you should always know why you are doing this and what you want to achieve. Except sometimes, it is just way more enjoyable to just sit back and watch the world burn.

What is something so insane, that it might just be useful again? The answer of course follows the long tradition of using already asinine and/or arcane technologies in domains they were not designed for. Nodejs? Already done. No. We need something truly Webscale. Enter: Full-Stack FORTRAN.

Why Fortran

Fortran is one of the oldest programming languages, preceding even C. It is a compiled language mostly used in high performance computing and for scientific simulations. It excels at number crunching and at distributing work across a cluster (OpenMP, MPI etc.), but pretty much can’t do anything else. Think C99 without the many libraries. Text processing? Possible, but cumbersome. Networking? Well, you could bind to the C library … you see where this is going.

I have been using Fortran for multiple years now in the context of geoscientific simulations in HPC. But I am also a web developer. Now it is time to join both of these worlds.

Why the Browser

All memes aside, why am I spending time on this project? There are many excellent and battle-tested math libraries written in Fortran, for example BLAS and LAPACK/LINPACK on which higher-level frameworks like Matlab or SciPy depend. Wouldn’t it be awesome to use these technologies in the Browser? Think client-side Jupyter Notebooks, like Iodide.

But, baby steps. To begin, I wanted to build a small program running in the browser with its core written in Fortran.

Chapter 1: Building A Toolchain

A slow descent into madness

The most popular toolchain for Wasm is Emscripten with Binaryen. It provides compilers based on LLVM and a good part of the C standard library ported to Wasm and JavaScript. For example, you can write to the standard output and it will show up in the browser console. Or you can access files and the browsers’ local storage will be used.

Unfortunately, Emscripten does not support Fortran. Why? First of all, most platforms Fortran is used on are 64 bit, 128 bit or something else entirely. Wasm, on the other hand, must be compiled as 32 bit: the 64 bit variant is not yet implemented in browsers or compilers, nor is its specification complete. For code that does not use any pointers (lol), this problem can mostly be ignored, but really we should find a 32 bit capable compiler.
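To make the pointer problem concrete, here is a minimal sketch in JavaScript, assuming nothing beyond the standard WebAssembly JS API. A wasm32 module sees its memory as one flat buffer, and a pointer is just a 32 bit byte offset into it. The offsets 1024 and 2048 are arbitrary values picked for illustration.

```javascript
// One Wasm page is 64 KiB; a wasm32 module addresses this memory with 32 bit offsets.
const wasmMemory = new WebAssembly.Memory({ initial: 1 });
const heap32 = new Int32Array(wasmMemory.buffer);

// A hypothetical "pointer" as compiled code would see it: a plain byte offset.
const ptr = 1024;

// Store a 32 bit integer at that address and read it back.
heap32[ptr >> 2] = 42;
const value = heap32[ptr >> 2];

// A pointer fits into 4 bytes, so it can itself be stored in an i32 slot.
const ptrSlot = 2048;
heap32[ptrSlot >> 2] = ptr;
const deref = heap32[heap32[ptrSlot >> 2] >> 2];

console.log(value, deref, wasmMemory.buffer.byteLength);
```

A compiler that emits 64 bit pointers would reserve 8 bytes for such a slot, which is why its data structures would not line up with a 32 bit libc.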

The next problem is the lack of a standard library. Fortran was not invented with portability in mind, so most compilers provide and link their own custom library.

I isolated about 4 different ways to approach the compilation of Fortran to Wasm, all of which depend on LLVM.

Use LFortran

LFortran is a very recent and very alpha project which aims to build an interactive compiler for the use in REPLs. However, it can also be used as a traditional compiler. It is based on LLVM and thus should be able to emit 32 bit Wasm.

At the time of writing it is missing a lot of features and only supports a very small subset of Fortran.

You can find my tests using LFortran here: Full-Stack-Fortran/tree/lfortran.

Use Flang-7

Flang-7 is a compiler based on the PGI compiler. It is also based on LLVM and able to emit LLVM IR as well as build binaries. Sadly, it only compiles to 64 bit.

It is still usable for small code examples, but pointers will be 64 bit which makes interfacing with any library hard.

You can find my tests using Flang-7 here: Full-Stack-Fortran/tree/flang-7.

Use F18

F18 is the new Fortran frontend for LLVM, which will eventually replace flang-7. It is currently in beta, and missed the release window for LLVM 10.0. At the time of writing, it does not support code generation yet, and instead only provides a parser. It compiles code by parsing it, converting the parse tree back to Fortran, and then passing that code to an external Fortran compiler. By default this is the PGI compiler.

During testing, I found that the PGI compiler was able to emit LLVM IR. When I used gfortran as a backend, only regular assembly code was emitted. This makes me think that the PGI compiler internally uses LLVM, which would make sense because flang-7 and f18 do so as well.

Now, the bitness depends on the backend compiler used. The PGI compiler stopped supporting 32 bit in 2017, and only the most recent version is freely available in form of a community license.

You can find my tests using F18 here: Full-Stack-Fortran/tree/f18.

Use Dragonegg

Dragonegg is a plugin for the GNU compilers. It uses GCC as the frontend and compiles GCC’s IR (GIMPLE) via LLVM. Thus it is able to emit LLVM IR or even compile to wasm.

Dragonegg is pretty old. The last supported versions of GCC and LLVM are gcc-4.6 (maybe 4.8) and llvm-3.3. This is bad because LLVM 3.3 does not support wasm and the IR format of LLVM 3.3 is not compatible with the newer versions of LLVM that do support wasm.

There are forks of dragonegg on GitHub, which claim to support more recent versions of LLVM and GCC. The only fork I got to compile is this, which is a fork of this (which claims to support GCC 8 and LLVM 6), which is a fork of the original. This did in fact emit compatible IR, but did not include any code from my functions. I have to investigate this further, maybe some stage optimizes away all user code because it is detected as unreachable.

After some time, I found out that while the textual representation of the LLVM IR was incompatible between LLVM 3.3 and >3.7, the binary bitcode was still compatible.
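The textual and the binary form are easy to tell apart on disk: LLVM bitcode starts with the magic bytes `BC 0xC0 0xDE`, while textual IR is plain text. The following sketch only illustrates that distinction. `isLlvmBitcode` is a hypothetical helper, and the sample bytes after the magic number are arbitrary.

```javascript
// LLVM bitcode files start with the magic bytes 'B' 'C' 0xC0 0xDE.
const BITCODE_MAGIC = [0x42, 0x43, 0xc0, 0xde];

// Returns true if the byte array begins with the bitcode magic number.
function isLlvmBitcode(bytes) {
  return bytes.length >= 4 && BITCODE_MAGIC.every((b, i) => bytes[i] === b);
}

// Textual IR is plain text, here a made-up first line of an .ll file.
const textualIr = new TextEncoder().encode("; ModuleID = 'example'\n");
// Magic number followed by arbitrary payload bytes (illustration only).
const bitcode = Uint8Array.from([0x42, 0x43, 0xc0, 0xde, 0x35, 0x14]);

console.log(isLlvmBitcode(textualIr)); // false
console.log(isLlvmBitcode(bitcode));   // true
```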

This was the toolchain I ended up using. You can find my various tests here: Full-Stack-Fortran/tree/dragonegg, and here: Full-Stack-Fortran/tree/dragonegg-old. The production version resides in master: Full-Stack-Fortran/tree/master.

Use AOCC

AOCC by AMD is an LLVM-based compiler with extended optimization support for AMD platforms like Ryzen. It includes a flang-7 version. It also ships with a custom version of dragonegg.

You can find my tests using AOCC here: Full-Stack-Fortran/tree/aocc.

Wait For The Future

Hopefully, the 64 bit variant of Wasm will be ready soon. Then we should be able to use most of the existing Fortran LLVM compilers.

Also, the F18 frontend should be finished soon and might be released as early as LLVM 11.0, maybe even without the dependency on an external PGI compiler?

We could also acquire a 32-Bit PGI compiler for a lot of money and hope that it is able to emit LLVM IR.

Chapter 2: Setting Up The Toolchain

Embrace The Madness

After a few days of experimentation, I chose Ubuntu in Docker with the latest Emscripten. The gfortran runtime and LAPACK library are built during the compilation of the Docker image.

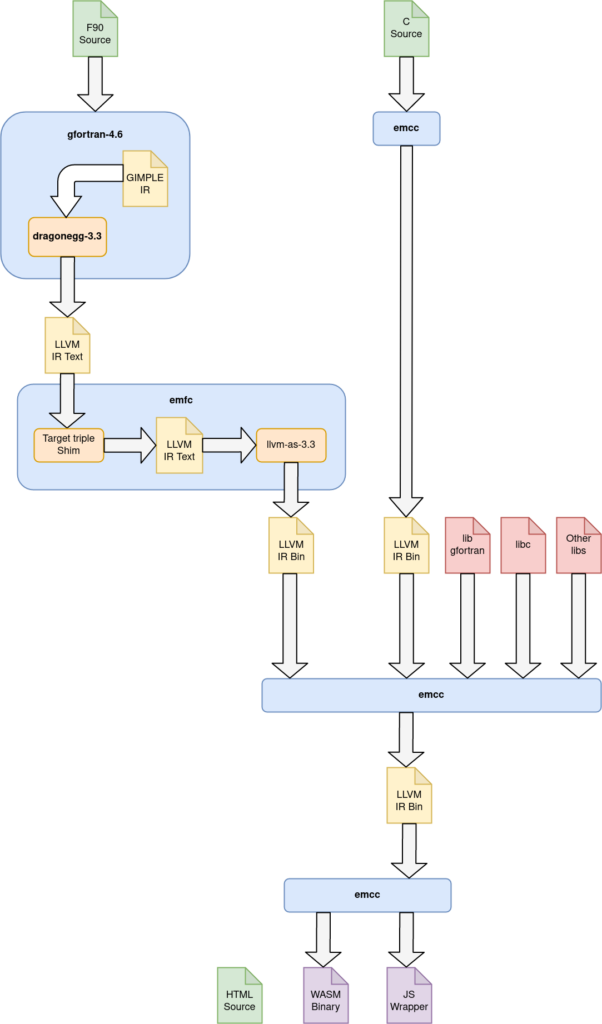

To integrate the dragonegg-gcc with the Emscripten LLVM stack, I wrote a small wrapper script that acts as a Fortran to LLVM bitcode compiler.

#!/bin/bash

# Extract the output file name that follows "-o" in the arguments
OUT=$(echo "$@" | sed -n 's/.* -o \(.*\).*/\1/p' | cut -d " " -f1)

# Compile to textual LLVM IR, retarget it to wasm32, then assemble it to bitcode
gfortran-4.6 -emit-llvm -S -flto -m32 -fverbose-asm -nostdlib -fplugin=/app/bin/dragonegg.so "$@" && \
    sed -i 's/^\(target\sdatalayout\s*=\s*\).*$/\1"e-m:e-p:32:32-i64:64-n32:64-S128"/' "$OUT" && \
    sed -i 's/^\(target\striple\s*=\s*\).*$/\1"wasm32-unknown-emscripten"/' "$OUT" && \
    llvm-as-3.3 "$OUT" -o "$OUT.bc" && \
    mv "$OUT" "$OUT.ll" && \
    mv "$OUT.bc" "$OUT" && \
    if [ -z "$KEEP_TEMPS" ]; then rm "$OUT.ll"; fi

The script also rewrites the LLVM data layout and target triple so that Emscripten accepts the code.
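The effect of the two `sed` substitutions in the wrapper script can be shown in isolation. This is a sketch of the same rewrite in JavaScript, not part of the toolchain: `retargetIr` is a hypothetical helper, and the input lines (including the `i386-pc-linux-gnu` triple) are made-up placeholders rather than real dragonegg output. Only the two replacement values are taken from the script.

```javascript
// Replacement values copied from the wrapper script.
const WASM_DATALAYOUT = 'e-m:e-p:32:32-i64:64-n32:64-S128';
const WASM_TRIPLE = 'wasm32-unknown-emscripten';

// Rewrites the "target datalayout" and "target triple" lines of textual LLVM IR.
function retargetIr(irText) {
  return irText
    .replace(/^(target\s+datalayout\s*=\s*).*$/m, `$1"${WASM_DATALAYOUT}"`)
    .replace(/^(target\s+triple\s*=\s*).*$/m, `$1"${WASM_TRIPLE}"`);
}

// Placeholder IR header, for illustration only.
const sampleIr = [
  "; ModuleID = 'example.f90'",
  'target datalayout = "e-p:32:32:32"',
  'target triple = "i386-pc-linux-gnu"',
].join('\n');

const retargeted = retargetIr(sampleIr);
console.log(retargeted);
```

All other lines pass through untouched, which is what lets the old LLVM 3.3 assembler still turn the file into bitcode afterwards.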

For the rest of the toolchain I used the following sources:

- Ubuntu packages from 18.04 and 14.04 for the old compilers

  - gcc-4.6, g++-4.6, gfortran-4.6, llvm-3.3-dev

- Dragonegg from llvm-mirror/dragonegg.git

  - Branch: release_33

- Emscripten from emscripten-core/emsdk.git

  - Version: 1.39.12

- gFortran library from gcc-mirror/gcc.git

  - Branch: releases/gcc-4.6

- LAPACK from Reference-LAPACK/lapack.git

  - Branch: master

The Dockerfile consists of two stages, in order to keep the size of the image down. To begin, it sets up Ubuntu and installs the required packages:

FROM ubuntu:18.04 as build

ENV DEBIAN_FRONTEND=noninteractive TZ=Europe/Berlin

RUN echo "deb http://archive.ubuntu.com/ubuntu/ trusty main restricted universe" >> /etc/apt/sources.list && \
    echo "deb http://security.ubuntu.com/ubuntu/ trusty-security main" >> /etc/apt/sources.list && \
    apt-get update && \
    apt-get -y install --no-install-recommends \
        build-essential python wget git gnutls-bin bash make ca-certificates xz-utils \
        gfortran-4.6 g++-4.6 gcc-4.6 gcc-4.6-plugin-dev llvm-3.3-dev && \
    rm -rf /var/lib/apt/lists/*

WORKDIR /app

Then, the toolchain elements are downloaded and built one after another:

# dragonegg
RUN git clone --single-branch --branch="release_33" --depth=1 https://github.com/llvm-mirror/dragonegg.git && \
    cd dragonegg && \
    LLVM_CONFIG=llvm-config-3.3 GCC=gcc-4.6 CC=gcc-4.6 CXX=g++-4.6 make -j$(nproc) && \
    mkdir -p /app/bin && \
    mv dragonegg.so /app/bin/

# emscripten
RUN git clone --depth=1 https://github.com/emscripten-core/emsdk.git && \
    cd emsdk && \
    ./emsdk install "1.39.12" && \
    ./emsdk activate "1.39.12"

COPY scripts /app/scripts

ENV PATH="/app/bin:/app/scripts:/app/emsdk:/app/emsdk/node/12.9.1_64bit/bin:/app/emsdk/upstream/emscripten:${PATH}"

# libgfortran
RUN git clone --single-branch --branch="releases/gcc-4.6" --depth=1 https://github.com/gcc-mirror/gcc.git

COPY vendor/gfortran gcc-build

RUN cd gcc-build && \
    ./patch.sh && \
    make -j$(nproc) build

# lapack
RUN git clone --depth=1 https://github.com/Reference-LAPACK/lapack.git

COPY vendor/LAPACK/make.inc lapack/make.inc

RUN cd lapack/SRC && \
    emmake make -j$(nproc) single double

The second stage just installs the binary packages instead of the development versions, and copies the generated assets from the first stage:

FROM ubuntu:18.04 as tool

ENV DEBIAN_FRONTEND=noninteractive TZ=Europe/Berlin

RUN echo "deb http://archive.ubuntu.com/ubuntu/ trusty main restricted universe" >> /etc/apt/sources.list && \
    echo "deb http://security.ubuntu.com/ubuntu/ trusty-security main" >> /etc/apt/sources.list && \
    apt-get update && \
    apt-get -y install --no-install-recommends python bash make cmake gfortran-4.6 llvm-3.3 && \
    rm -rf /var/lib/apt/lists/*

COPY --from=build /app/bin /app/bin/

COPY --from=build /app/emsdk /app/emsdk

COPY --from=build /root/.emscripten /root/.emscripten

COPY --from=build /app/gcc-build/bin/libgfortran.a /app/lapack/*.a /app/lib/

COPY scripts /app/scripts

ENV PATH="/app/bin:/app/scripts:/app/emsdk:/app/emsdk/node/12.9.1_64bit/bin:/app/emsdk/upstream/emscripten:${PATH}"

ENV LIBRARY_PATH="/app/lib:${LIBRARY_PATH}"

WORKDIR /app

Just like that, the toolchain is ready.

The Standard Library

I actually got the flang-7 runtime library to compile to wasm, but I did not end up using it. Because Emscripten uses musl, a few patches were needed for the flang runtime. Also, I modified the CMake definitions to only build the libraries without bootstrapping and building the compiler itself. I also removed AIO/threads support, even though this might be possible using Emscripten’s pthread support. The library only gets compiled to LLVM IR, to be used by Emscripten later on. Check it out here: https://github.com/StarGate01/flang

Instead, I managed to compile the entirety of libgfortran to LLVM IR. I had to jump through a few hoops, but together with Emscripten’s libc this provides a complete gFortran runtime in the browser.

Because Wasm only supports a maximum float size of 64 bit, quad-precision math is not supported. Also, complex numbers won’t compile that well (yet, this is under investigation). Because I could not find a way to actually bootstrap the compiler in wasm, I built libgfortran “out-of-tree”, using my own makefile instead of using the provided autotools scripts. I adjusted the required configuration files by hand.

- config.h defines the libc environment

- kinds.h, selected_int_kind.inc and selected_real_kind.inc define available numeric types

The makefile just gathers all sources and compiles them using the correct compilers. After that, it packs them into a static library archive.

.PHONY: build dirs

SRCPATH:=/app/gcc/libgfortran
BLDPATH:=/app/gcc-build/bin

FC:=emfc.sh
CC:=emcc
AR:=emar
RANLIB:=emranlib

INCPATH:=-I$(SRCPATH) -I$(SRCPATH)/config -I$(SRCPATH)/.. -I$(SRCPATH)/../.. -I$(SRCPATH)/../libgcc -I$(SRCPATH)/../gcc
FCFLAGS:=-O3 -Wall -I$(BLDPATH) -fallow-leading-underscore $(INCPATH)
CCFLAGS:=--target=wasm32-unknown-emscripten -O3 -c -flto -emit-llvm -m32 -Isrc -Wall $(INCPATH)
ARFLAGS:=cr

# Gather sources
FSRCS:=$(wildcard $(SRCPATH)/generated/*.F90) $(wildcard $(SRCPATH)/intrinsics/*.F90) \
    $(wildcard $(SRCPATH)/generated/*.f90) $(wildcard $(SRCPATH)/intrinsics/*.f90)
CSRCS:=$(filter-out $(wildcard $(SRCPATH)/runtime/*_inc.c),$(wildcard $(SRCPATH)/runtime/*.c)) \
    $(filter-out $(wildcard $(SRCPATH)/intrinsics/*_inc.c),$(wildcard $(SRCPATH)/intrinsics/*.c)) \
    $(wildcard $(SRCPATH)/generated/*.c) $(wildcard $(SRCPATH)/io/*.c) \
    $(SRCPATH)/fmain.c

# Map every source file to a bitcode file in the build tree
FBCS:=$(FSRCS:$(SRCPATH)/%=$(BLDPATH)/%.bc)
CBCS:=$(CSRCS:$(SRCPATH)/%=$(BLDPATH)/%.bc)
LIB:=$(BLDPATH)/libgfortran.a

# Control targets
build: dirs $(LIB)

# Mirror the source directory structure in the build tree
dirs:
	cd $(SRCPATH) && \
	find . -type d -exec mkdir -p -- $(BLDPATH)/{} \;

# Fortran 77 and 95
$(BLDPATH)/%.f90.bc: $(SRCPATH)/%.f90
	$(FC) $(FCFLAGS) $(MODDIRS) -J $(dir $@) -o $@ -c $<

$(BLDPATH)/%.F90.bc: $(SRCPATH)/%.F90
	$(FC) $(FCFLAGS) $(MODDIRS) -J $(dir $@) -o $@ -c $<

$(BLDPATH)/%.f.bc: $(SRCPATH)/%.f
	$(FC) $(FCFLAGS) $(MODDIRS) -J $(dir $@) -o $@ -c $<

# Regular C
$(BLDPATH)/%.c.bc: $(SRCPATH)/%.c
	$(CC) $(CCFLAGS) -o $@ $<

# Archive
$(LIB): $(CBCS) $(FBCS)
	$(AR) $(ARFLAGS) $@ $^
	$(RANLIB) $@

This yields a ~3MB libgfortran.a library, about the same size as the one on my x86_64 PC. However, when building against this library, only the code actually used gets included in the final binary (link-time-optimization). A reasonable Fortran program (the LAPACK tests) together with libc, the emscripten adapters and libgfortran is about 400KB in size.

Chapter 3: Projects

A Simple Example

The aim of my first test was to compile a simple function and call it from JavaScript. To compile my test code, I used a makefile very similar to the one above.

module test
    implicit none

contains

    function add(a, b) result(res)
        integer, value, intent(in) :: a, b
        integer :: res

        write(*,*) "Hello from Fortran!"
        res = a * b
    end function

end module

And rejoice: the developer console displays “Hello from Fortran!”. This means that the gFortran runtime and its binding to libc work as expected.
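On the JavaScript side, the function has to be looked up by the symbol name gfortran gives it, not by its plain Fortran name. A small sketch of gfortran's default naming scheme follows. Both helpers are hypothetical and exist only for illustration. Procedures declared with `bind(C, name=...)` keep exactly the name given there.

```javascript
// Module procedures: gfortran emits "__<module>_MOD_<procedure>", all lowercase,
// because Fortran identifiers are case-insensitive.
function gfortranModuleSymbol(moduleName, procedureName) {
  return `__${moduleName.toLowerCase()}_MOD_${procedureName.toLowerCase()}`;
}

// External procedures outside of any module (such as LAPACK routines) get the
// lowercase name with a single trailing underscore by default.
function gfortranExternalSymbol(procedureName) {
  return `${procedureName.toLowerCase()}_`;
}

console.log(gfortranModuleSymbol('test', 'add')); // __test_MOD_add
console.log(gfortranExternalSymbol('DGESV'));     // dgesv_
```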

To call this function from JavaScript, Emscripten helps us a lot:

<!DOCTYPE html>
<html>
<head>
    <meta charset="UTF-8" />
    <title>Fortran WASM Test</title>
</head>
<body>
    <script src="assembly.js"></script>
    <script>
        let module = Module();
        module.onRuntimeInitialized = _ => {
            const add = module.cwrap('__test_MOD_add', 'number', ['number', 'number']);
            console.log("call from javascript: " + add(5, 7));
        };
    </script>
</body>
</html>

Calling the C Library

Next up, I wanted to call functions implemented in C. I wrote a small C library:

#include <stdio.h>

int iolib_test (int a) {
    printf("Hello from C!\n");
    return a * 10;
}

Then I wrote a binding in Fortran to this function, and to various other libc functions like write. Because we actually have a runtime, the binding is really easy due to iso_c_binding.

module iolib
    use, intrinsic :: iso_c_binding
    implicit none

    interface

        integer(C_INT32_T) function iolib_write_c(fd, buf, count) bind(C, name='write')
            use, intrinsic :: iso_c_binding
            implicit none
            integer(C_INT32_T), value, intent(in) :: fd, count
            character(kind=c_char), intent(in) :: buf
        end function

        integer(C_INT32_T) function iolib_test(var) bind(C, name='iolib_test')
            use, intrinsic :: iso_c_binding
            implicit none
            integer(C_INT32_T), value, intent(in) :: var
        end function

    end interface

contains

    subroutine iolib_write(string)
        character(*), intent(in), target :: string
        integer(C_INT32_T) :: res

        res = iolib_write_c(1_C_INT32_T, string, int(len(string), C_INT32_T))
    end subroutine

end module

Calling the function is easy:

module test
    use iolib
    implicit none

contains

    function add(a, b) result(res)
        integer, value, intent(in) :: a, b
        integer :: res

        call iolib_write("Hello from Fortran via libc!"//char(10))
        res = a * b + iolib_test(a)
    end function

end module

Of course, the makefile has to be adjusted to build and link the object files correctly, but it is still very similar to the gFortran makefile.
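Note that the binding passes the string as an address plus an explicit length: Fortran strings carry no terminating NUL byte, which is why the `count` argument of `write` matters. The sketch below mimics that contract on the JavaScript side with a stand-alone `WebAssembly.Memory`. The helper `readFortranString` and the address 256 are assumptions for illustration. In a real Emscripten build the bytes would live in the module's own heap.

```javascript
// Stand-in for the linear memory of a compiled module.
const stringMemory = new WebAssembly.Memory({ initial: 1 });
const heapU8 = new Uint8Array(stringMemory.buffer);

// Decode exactly `len` bytes starting at byte offset `ptr`; no NUL terminator is assumed.
function readFortranString(heap, ptr, len) {
  return new TextDecoder('utf-8').decode(heap.subarray(ptr, ptr + len));
}

// Simulate the Fortran side: place the bytes at an address, followed by unrelated data.
const text = 'Hello from Fortran via libc!\n';
const textPtr = 256; // arbitrary address, for illustration only
heapU8.set(new TextEncoder().encode(text), textPtr);
heapU8[textPtr + text.length] = 0x58; // an 'X' directly behind the string, not a NUL

// The text is pure ASCII, so its character count equals its byte count.
const decoded = readFortranString(heapU8, textPtr, text.length);
console.log(JSON.stringify(decoded));
```

Reading until a NUL byte instead would run past the end of the string and pick up the `X`.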

Using LAPACK and BLAS

To truly test the runtime, I compiled the compatible parts of LAPACK and BLAS. The makefile had to be correctly configured, but then it compiled just fine for single and double precision.

Update: It now compiles for complex numbers as well.

I found some test codes at people.sc.fsu.edu/~jburkardt/f77_src/lapack_examples/lapack_examples.html and people.sc.fsu.edu/~jburkardt/f_src/lapack_examples/lapack_examples.html by John Burkardt. I used those to test various LAPACK/BLAS routines.

Quad-precision numeric support is still unavailable, but the rest works just fine.
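One detail to keep in mind when feeding data to these routines from JavaScript: Fortran stores arrays in column-major order, so a matrix has to be laid out column by column in memory. How the data gets copied into the module's memory is not covered here. The sketch below only shows the layout conversion, and `toColumnMajor` is a hypothetical helper.

```javascript
// Flattens a row-wise nested array into the column-major layout Fortran expects.
function toColumnMajor(rows) {
  const m = rows.length;    // number of rows
  const n = rows[0].length; // number of columns
  const out = new Float64Array(m * n);
  for (let i = 0; i < m; i++) {
    for (let j = 0; j < n; j++) {
      // Element (i, j) lives at offset j * m + i, with m as the leading dimension.
      out[j * m + i] = rows[i][j];
    }
  }
  return out;
}

const flat = toColumnMajor([
  [1, 2, 3],
  [4, 5, 6],
]);
console.log(Array.from(flat)); // [1, 4, 2, 5, 3, 6]
```

A `Float64Array` matches double precision; for the single precision routines a `Float32Array` would be the counterpart.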

While you were reading this article, the test scripts have already run. So open up the developer console and check it out!

Chapter 4: Future Work

- I want to write a small simulation with a graphical (WebGL or canvas) output. Stay tuned!

- Improve support for complex datatypes (See here)

- Bring down the size of the binaries

- Integrate this stack into existing projects that need it

Chapter 5: Useful Downloads

All of the source code from this article, and the toolchain Docker image:

- https://github.com/StarGate01/Full-Stack-Fortran (Includes releases)

- https://hub.docker.com/r/stargate01/f90wasm

- https://projects.chrz.de/f90wasm/ demo page, or this page

Thanks to:

- http://www.netlib.org/lapack/

- https://gcc.gnu.org/

- https://llvm.org/

- https://emscripten.org/

- https://github.com/smikes/femscripten

- https://gws.phd/posts/fortran_wasm/

Image source: https://dev.to/rly

> “It excels in number crunching and support for distributing work in a cluster (OpenMP, MPI etc.), but pretty much cant do anything else. Think C99 without the many libraries. ”

Alas, this was one great thing about good ol’ VAX/VMS (later rechristened OpenVMS and ported to other, somewhat… less satisfactory… hardware): the system, and the multitude of programming languages available for it (including BASIC, Pascal, FORTRAN, COBOL, ADA, assembler, C, and a VMS-specific language named BLISS — to name just those that I’m personally aware of for sure), were specifically designed to easily interoperate, with the system runtime libraries (of which there were many) and each other’s native code. The only part that was even the slightest bit tricky was getting some complicated system data structures declared just right in each language — but the system documentation was superb and extremely thorough, and included a nice manual that showed exactly how to do it in every conceivable language. My final project in college, where we used VAX/VMS, had a mainline written in COBOL, internal data-management in (ouch) Pascal, a “get key” routine in assembler, and the user interface implemented through a system “Forms Management” library. And it was dead easy. I used VAX/VMS for three years in college, and for nearly ten years at my first job. Loved it.

Years later, upon entering the worlds of *nix and Windows, I was horrified, and deeply disappointed, to find that other operating systems didn’t provide these capabilities, which would have been easy if the vendors had just planned ahead for them, set forth standards to third-party compiler vendors, and so forth; that it was nearly impossible to write programs “partly in one language, and partly in another”; and that all the users and developers simply took this state of affairs for granted! My God, the compilers didn’t even generate compilation listings — let alone listings showing the machine language generated for each source statement! (Today’s programmers may sneer at such a thought — “who needs it?!?” — but it proved very useful to know such things. I once accidentally deleted the only copy of the Fortran source code for an important subroutine, but still had the compiled machine code. Having long become familiar with the code generated by the Fortran compiler, I was able to reverse-compile the machine code back to source…. which, when compiled afresh, turned out to generate exactly the same machine instruction stream as the original, by way of confirmation. Try THAT in any language today. Hint: you can still do it, it just isn’t nearly so straightforward.)

So, I have to wryly smirk, and sadly laugh, when articles do occasionally remark upon the “fact” that certain languages “can’t” do certain things. If the compilers and libraries were properly designed, every language could do everything. Been there, done that.

This was a fun read. FORTRAN is a great scientific computing language, and it would be neat to have some software similar to Jupyter notebooks where you can write it.

@Christopher Chiesa – actually .net decompiles very cleanly and has since the very beginning from my understanding… https://github.com/dnSpy/dnSpy is amazing… this great benefit comes in handy for me on a regular basis, very similar to how you describe recovering a source delete… e.g. getting something binary that represents useful work yet somehow not quite good enough as-is, just decompile and hack it to my will =)

I noticed that you posted this on 4/21. Did you post these results the day after you did the actual work, on 4/20? I. e., were you high when you did this? Because this is amazing.

The only reason I still use C rather than Fortran is because the one last thing that C can do that Fortran can’t for me is compile to WebAssembly; you cleared the final obstacle for me! Will be trying to replicate your achievement in the next few months and see where the roadblocks are in building a complete Flang-to-LLVM-to-wasm toolchain that is as complete as Alon Zakai’s excellent Emscripten. Thank you so much for making 2020 not suck (or at least suck less:)

Glad you like it! I’d call the publishing date a fitting, although unintended coincidence 😉 . As for your project, Emscripten support should be a lot easier to implement once the new Flang interface in LLVM reaches maturity.

Hi, glad to see your excellent work! We are now working on compiling Ceres Solver to wasm. However, we need a LAPACK that supports complex numbers. Have you made any further progress on this? Or can you give us some suggestions, like using flang-11?

Hi, unfortunately I have not continued work on this toolchain yet. In the long term, your best bet would be to wait for proper Fortran support in LLVM (the flang-11 module is not yet capable of emitting IR or bytecode).

In theory, it should be possible to compile complex number support using the existing toolchain, but I did not yet figure out how – however I plan to look into this again.

If you want to track this problem or continue the discussion, I created an issue on GitHub: https://github.com/StarGate01/Full-Stack-Fortran/issues/2 .

Hi, just a quick update: I managed to compile BLAS and LAPACK with support for complex numbers, and it seems to work.

However, complex Fortran intrinsics are still causing issues, see the GitHub link.

If you just want to use the LAPACK or BLAS primitives from C or C++, it should be possible to link against the pre-compiled binaries contained in the Docker image.